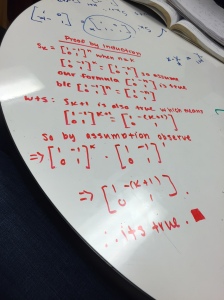

Today we continued our work with proofs by induction. I’m gonna be honest, I am NOT a fan of induction! I’m hoping that eventually I’ll have an “ah-ha” moment where it clicks in my brain, but until that happens I’m struggling with understanding what exactly to use as a base case and how to prove that if  is true, then so is

is true, then so is  . We began class by learning how you can use a contrapositive approach when using induction. To demonstrate this, Casey wrote out the goal of “standard” induction and then the goal of “contrapositive” induction:

. We began class by learning how you can use a contrapositive approach when using induction. To demonstrate this, Casey wrote out the goal of “standard” induction and then the goal of “contrapositive” induction:

Standard

Contrapositive

We also learned that the contrapositive approach to induction is referred to as the proof by least counter-example method. I liked writing the goals out in this notation because while I don’t really understand how to make induction work, this helps give me a visual representation of what I am trying to do. I assuming that like the “normal” contrapositive approach to proofs, this one can only be used when we’re given an “if-then” statement.

We then moved on to discussing the Fundamental Theory of Arithmetic, which is arguably the most important proof we’ve learned to date. The theorem says that any number can be written as the product of two prime numbers. For example,  and

and  . In more math-y terms, this can be expressed as:

. In more math-y terms, this can be expressed as:

We can read about this on page 164 in our textbook, which I have done, but still don’t quite understand it, so I’m going to look at it more in the near future in the hopes I can understand it better. We were told before reading it that the three things we need to prove this are: (1) strong induction (2) cases and (3) proof by least counter-example.

We then went over a proof about cows that basically shows that the induction method could actually be complete bullshit.

The proof basically says that all cows are brown, which obviously isn’t true, but the proof makes a pretty convincing argument. It’s just a tad bit trippy. Instead of rewriting the proof just like we did it in class, I will replace the cows with something a little more exciting…LIGHTSABERS! In honor of my boy Obi-Wan, I am going to claim that all lightsabers are blue.

Proposition

All lightsabers are blue.

Proof

Base case: {one blue lightsaber}

Inductive step: Suppose whenever someone has a set of  lightsabers, that all

lightsabers, that all  are known.

are known.

WTS that in any set of  lightsabers, all

lightsabers, all  lightsabers are blue.

lightsabers are blue.

Let  be any set with

be any set with  lightsabers.

lightsabers.

Consider that

By assumption this would mean that all lightsabers in  are blue.

are blue.

Consider

Thus, our hypothesis implies that the lightsabers in  are blue.

are blue.

is blue

is blue

all lightsabers in

all lightsabers in  are blue.

are blue.

*****the base case could have been something like “the same color” instead of blue*****

We know that this isn’t true because there are other options for lightsabers (purple, green, red,…) but the proof above is still a relatively solid proof. This is why some mathematicians are skeptical about using induction to prove things. However, I can see how induction is an important way to think about proofs even if I don’t yet fully understand it.

After this, we just spent the rest of class working on homework, which I didn’t really make much headway on. I plan on blogging more about this later, so in order to avoid repeating myself, that’ all for now.

Peace

Emily

, is a relation,

such that

. In essence,

is a well-defined rule that associates to every

some

latex {f}({a})={b}$.

, which violates the vertical line test.

is represented by

whereas the co-domain is represented by

. In more mathy language:

.

and

are equal if:

,is said to be surjective/epic/onto if

.

is called injective/monic/one-to-one if:

.

that is both surjective and injective is consider bijective.